12.1.0.2 introduced the new Columnar Flash Cache where 1MB of blocks that are all in HCC format are rewritten so as to make each column from each CU contiguous. This works by first writing all the block headers to an array, then writing all the CU headers to an array, finally writing all the Column 1 column-level-CUs, then writing all the Column2 column-level-CUs etc.

The flash cache hash table maintains a simple mapping of column numbers to 64KB flash pages so, for any given query, we can simply do asynchronous disk I/O of the minimum set of 64KB pages required to cover the referenced columns.

Within the “flashcache” cell stats there is a new nested cell stat called “columnarcache” that enables you to track how it is working.

> set long 50000000

> set pagesize 10000

> select xmltype(STATISTICS_VALUE).getclobval(2,2) from v$cell_state;

XMLTYPE(STATISTICS_VALUE).GETCLOBVAL(2,2)

--------------------------------------------------------------------------------

....

<stats type="flashcache">

<stats type="columnarcache">

<stat name="columnar_cache_size">0</stat>

<stat name="columnar_cache_keep_size">0</stat>

<stat name="columnar_cache_keep_read">0</stat>

<stat name="columnar_cache_keep_read_bytes">0</stat>

<stat name="columnar_attempted_read">0</stat>

<stat name="columnar_attempted_read_bytes">0</stat>

<stat name="columnar_cache_hits">0</stat>

<stat name="columnar_cache_hits_read_bytes">0</stat>

<stat name="columnar_cache_hits_saved_bytes">0</stat>

<stat name="columnar_cache_pop">0</stat>

<stat name="columnar_cache_pop_bytes">0</stat>

<stat name="columnar_cache_pop_throttled">0</stat>

<stat name="columnar_cache_pop_invalid_buf">0</stat>

<stat name="columnar_cache_pop_size_align">0</stat>

<stat name="columnar_cache_pop_findchdrfailure_mainchdr">0</stat>

<stat name="columnar_cache_pop_findchdrfailure_childchdr">0</stat>

</stats>

</stats>

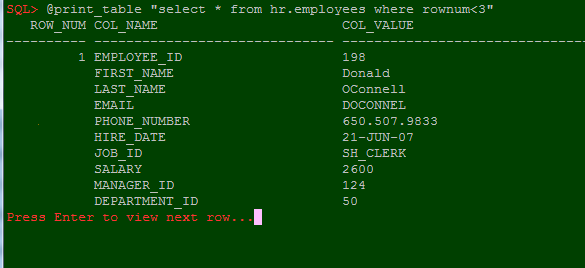

I typically spool the output of this to wade through it an editor but if we want to monitor how it is working with some workload, we need to extract individual stats, for example I bounced the cells and verified the cache was empty:

> select xmlcast(xmlquery('/cell_stats/stats/stats/stat[@name="columnar_cache_size"]' passing xmltype(STATISTICS_VALUE) returning content) as varchar2(200) ) "COLUMNAR CACHE SIZE"

from v$cell_state

where xmlexists('/cell_stats/stats/stats[@type="columnarcache"]' passing xmltype(STATISTICS_VALUE));

COLUMNAR CACHE SIZE

--------------------------------------------------------------------------------

0

I am using the 1GB TPC-H schema which takes a little more 400MB on disk when compressed with Query Low:

SQL> select sum(bytes) from user_segments where SEGMENT_NAME in ('SUPPLIER','PARTSUPP','LINEITEM','ORDERS','PART','CUSTOMER');

SUM(BYTES)

----------

420675584

and checking the columnar cache again shows about half of the data has been rewritten into columnar cache format instead of caching raw blocks:

SQL> select xmlcast(xmlquery('/cell_stats/stats/stats/stat[@name="columnar_cache_size"]' passing xmltype(STATISTICS_VALUE) returning content) as varchar2(200) ) "COLUMNAR CACHE SIZE"

from v$cell_state

where xmlexists('/cell_stats/stats/stats[@type="columnarcache"]' passing xmltype(STATISTICS_VALUE));

COLUMNAR CACHE SIZE

--------------------------------------------------------------------------------

179306496

So let’s look at how the cache helped:

select

xmlcast(

xmlquery(

'/cell_stats/stats/stats/stat[@name="columnar_cache_hits_read_bytes"]'

passing xmltype(STATISTICS_VALUE)

returning content

) as varchar2(200)

) "HIT READ BYTES"

from v$cell_state

where xmlexists('/cell_stats/stats/stats[@type="columnarcache"]' passing xmltype(STATISTICS_VALUE));

HIT READ BYTES

--------------------------------------------------------------------------------

1909456896

select

xmlcast(

xmlquery(

'/cell_stats/stats/stats/stat[@name="columnar_cache_hits_saved_bytes"]'

passing xmltype(STATISTICS_VALUE)

returning content

) as varchar2(200)

) "HIT SAVED BYTES"

from v$cell_state

where xmlexists('/cell_stats/stats/stats[@type="columnarcache"]' passing xmltype(STATISTICS_VALUE));

HIT SAVED BYTES

--------------------------------------------------------------------------------

1128267776

which shows we were able to eliminate about 35% of the disk I/O for this query run!

We could, of course, have gotten that information more simply with the regular stat “cell physical IO bytes saved by columnar cache” but I wanted to show how to pull values from v$cell_state for use in scripts.

Many people only use Query High compression as they find the increased disk I/O from Query Low more than offsets the savings from cheaper decompression costs. However, with the columnar cache in place, those trade-offs have changed. It may be worth re-evaluating the decision as to when user Query Low vs. Query High particularly on CPU-bound cells.

Addendum: performance bug 20525311 affecting the columnar cache with selective predicates is fixed in the next rpm.