When you work with a large number of Real-Time SQL Monitor (RTSM) reports in the ACTIVE format (the interactive HTML report with JavaScript), it quickly becomes inconvenient to open them one by one in a browser. Very often you want to load them into the database, store them, index them, and analyze them in bulk.

Some RTSM reports are easy to process — for example, those exported directly from EM often contain a plain XML payload that can be extracted and parsed with XMLTABLE().

But most ACTIVE reports do not store XML directly.

Instead, they embed a base64-encoded and zlib-compressed XML document inside a <report> element.

These reports typically look like this:

<html>

<head>

<meta http-equiv="Content-Type" content="text/html; charset=utf-8"/>

<script ...>

var version = "19.0.0.0.0";

...

</script>

</head>

<body onload="sendXML();">

<script id="fxtmodel" type="text/xml">

<!--FXTMODEL-->

<report db_version="19.0.0.0.0" ... encode="base64" compress="zlib">

<report_id><![CDATA[/orarep/sqlmonitor/main?...]]></report_id>

eAHtXXtz2ki2/38+hVZ1a2LvTQwS4pXB1GJDEnYc8ALOJHdrSyVA2GwAYRCOfT/9

...

ffUHVA==

</report>

<!--FXTMODEL-->

</script>

</body>

</html>

At first glance it’s obvious what needs to be done:

- Extract the base64 block

- Decode it

- Decompress it with zlib

- Get the original XML

<sql_monitor_report>...</sql_monitor_report>

And indeed — if the database had a built-in zlib decompressor, this would be trivial.

Unfortunately, Oracle does NOT provide a native zlib inflate function.

UTL_COMPRESScannot be used — it expects Oracle’s proprietary LZ container format, not a standard zlib stream.- There is no PL/SQL API for raw zlib/DEFLATE decompression.

- XMLType, DBMS_CRYPTO, XDB APIs also cannot decompress zlib.

Because the RTSM report contains a real zlib stream (zlib header + DEFLATE + Adler-32), Oracle simply cannot decompress it natively.

Solution: use Java stored procedure

The only reliable way to decompress standard zlib inside the database is to use Java.

A minimal working implementation looks like this:

InflaterInputStream inflaterIn = new InflaterInputStream(in);

InflaterInputStream with default constructor expects exactly the same format that RTSM uses.

I created a tiny Java helper ZlibHelper that inflates the BLOB directly into another BLOB.

It lives in the database, requires no external libraries, and works in all Oracle versions that support Java stored procedures.

Source code: https://github.com/xtender/xt_scripts/blob/master/rtsm/parsing/ZlibHelper.sql

PL/SQL API: PKG_RTSM

On top of the Java inflater I wrote a small PL/SQL package that:

- Extracts and cleans the base64 block

- Decodes it into a BLOB

- Calls Java to decompress it

- Returns the resulting XML as CLOB

- Optionally parses it with

XMLTYPE

Package here:

pkg_rtsm.sql

https://github.com/xtender/xt_scripts/blob/master/rtsm/parsing/pkg_rtsm.sql

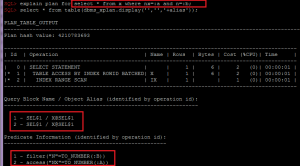

This allows you to do things like:

xml:=xmltype(pkg_rtsm.rtsm_html_to_xml(:blob_rtsm));

Or load many reports, store them in a table, and analyze execution statistics across hundreds of SQL executions.

The next part of this series explains how to parse the extracted XML and work with the SQL Monitor data directly: Parsing RTSM(Real-Time SQL Monitor) XML Reports